July 20, 2023 - by Anish Purohit

The surge in popularity of generative AI models such as OpenAI's ChatGPT, GPT-3, and DALL-E, and Google's Bard, has sparked significant buzz around the term “prompt engineering”. Although the concept existed before these large language models emerged, interest in it has grown manifold since ChatGPT's release.

This blog delves into the importance of prompt engineering in the context of generative AI models. We will explore strategies that can yield good results in a limited number of trials, saving time and improving the quality of the outputs.

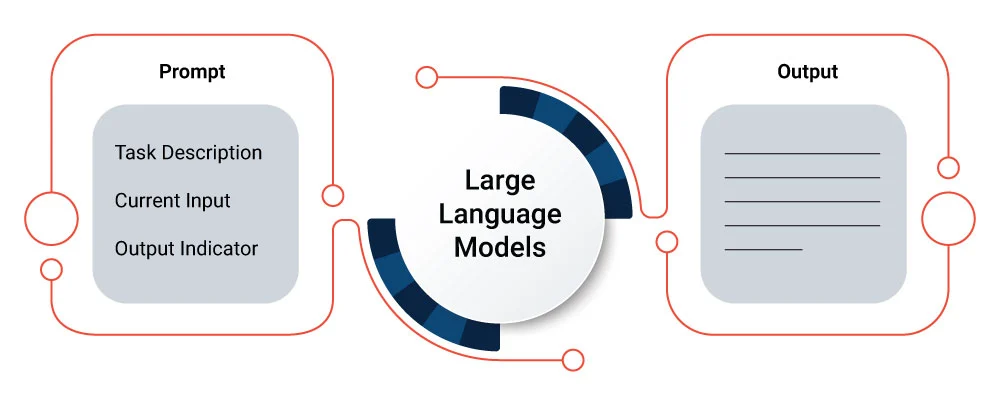

Prompt engineering can be defined as providing explicit instructions or cues to AI models, helping them generate more accurate and relevant results. It was being practiced, directly or indirectly, even before the term itself gained recognition. For instance, organizations use various strategies to optimize results when using Google search, from putting phrases in quotation marks to ensuring that specific keywords appear on the first or second page.

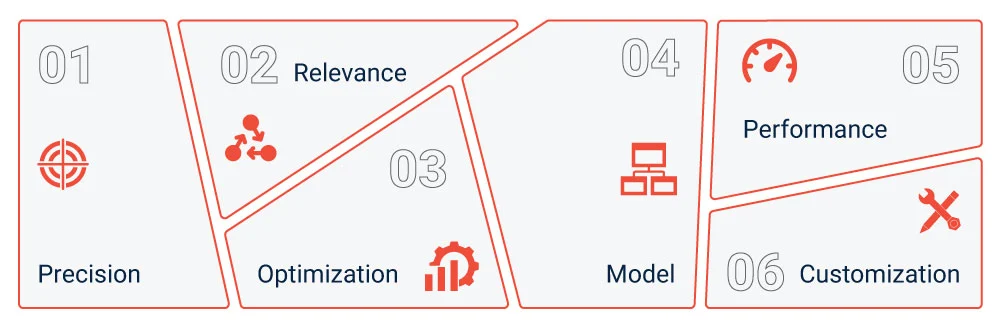

A concept in Artificial Intelligence, prompt engineering is increasingly being used to develop and optimize language models (LMs) for a wide variety of applications and research topics. Developers can use it to better understand the capabilities (and limitations) of large language models (LLMs). They can also increase the capacity of LLMs on a wide range of common and complex tasks and design robust techniques for AI-based applications. The six pillars of prompt engineering include precision, relevance, optimization, model, performance, and customization. Let’s go into further detail below:

AI language models mimic human behavior; therefore, the precision of results is an important aspect of prompt engineering. Precision ensures that the generated responses are reliable, error-free, and meet the specific requirements of the prompt engineering task.

AI language models are trained on vast datasets, so whenever the user asks a question, the answer must be coherent. Relevance in prompt engineering ensures that the instructions provided to the AI model align with the intended task or goal at hand.

AI responses should be efficient and fast, especially in scenarios where real-time or near-real-time responses are required. Optimized prompts can help reduce the computational resources needed and improve the inference speed, enabling more efficient deployment of AI systems.

The model is the core component in prompt engineering, responsible for generating responses based on the input prompts. The model learns from data to understand language patterns and context, enabling the AI system to produce accurate and relevant outputs. Prompt engineers support the model's performance, adaptability, and continuous improvement through fine-tuning and optimization, leading to effective response generation in AI language models.

Performance is crucial in prompt engineering as it directly impacts the quality and effectiveness of AI language models. A well-performing model generates accurate, relevant, and contextually appropriate responses, enhancing user satisfaction and trust.

Any AI system needs to be customizable and adaptable to specific tasks or domains. Customization ensures that the generated responses are highly relevant, accurate, and aligned with the specific needs and requirements of the prompt engineering application.

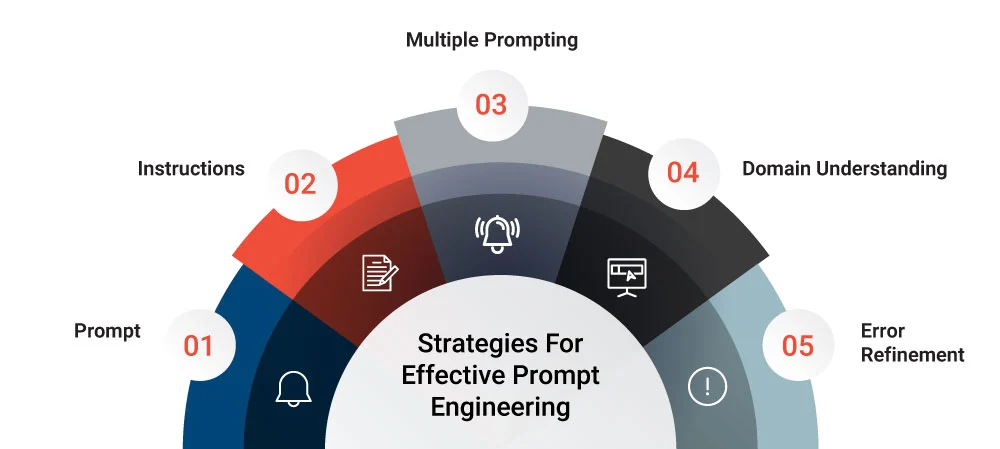

Strategies are crucial in prompt engineering as they provide a systematic approach to achieving desired outcomes and optimizing AI language models. Effective strategies ensure precision, control, and relevance in generating responses, leading to accurate and contextually appropriate outputs. By following well-defined strategies, prompt engineers can save time, enhance performance, and address ethical considerations.

Here are a few steps or strategies that can help in getting the desired results:

Before you embark on the prompt engineering journey, it is crucial to have a clear understanding of the task. Identify the specific tasks you want the AI model to perform, and specify the format and the desired results. For example, you can use DALL-E to generate images and ChatGPT to produce text-based outputs.

Once you have a blueprint of the output, focus on giving the model clear and specific instructions. Craft unambiguous prompts that leave no room for misinterpretation, and include examples of the desired output where they help steer the model's behavior. The emphasis should be on using meaningful, relevant keywords rather than on the number of words.
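As an illustration of clear and specific instructions, the sketch below assembles a prompt from an explicit task, output format, and list of constraints. The `build_prompt` helper and the water bottle example are hypothetical, chosen only to show the idea; they are not part of any library.

```python
def build_prompt(task, output_format, constraints):
    """Assemble a prompt that states the task, the expected format, and explicit constraints."""
    lines = [f"Task: {task}", f"Output format: {output_format}"]
    if constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in constraints)
    return "\n".join(lines)


# A vague prompt leaves length, content, and tone to chance.
vague = "Write about our product."

# A specific prompt spells out what is wanted (illustrative values).
specific = build_prompt(
    task="Write a product description for a stainless steel water bottle.",
    output_format="One paragraph of at most 60 words.",
    constraints=["Mention the 750 ml capacity.", "Avoid superlatives."],
)
print(specific)
```

The structured version uses only a few more words than the vague one, but each line removes a possible misreading, which is the point of favoring meaningful keywords over length.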

Each AI language model application may have distinct requirements. By experimenting with multiple prompts, you can explore different approaches and formats to find the most effective and impactful prompts. These steps help prompt engineers to optimize model performance, improve response quality, and achieve the desired outcomes.
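One way to make this experimentation systematic is to run several prompt variants through the same scoring function and rank them. The sketch below assumes a hypothetical `fake_model` stub in place of a real model call and a toy keyword-coverage score; in practice you would substitute your own model client and a metric suited to the task.

```python
def fake_model(prompt):
    """Hypothetical stand-in for a real model call; returns a canned reply."""
    if "bullet" in prompt.lower():
        return "- Durable steel body\n- Keeps drinks cold\n- 750 ml capacity"
    return "This bottle is durable and keeps drinks cold."


def score(response, required_terms):
    """Fraction of required terms that appear in the response (a toy metric)."""
    text = response.lower()
    hits = sum(1 for term in required_terms if term.lower() in text)
    return hits / len(required_terms)


def compare_prompts(prompts, model, required_terms):
    """Run each prompt once and return (prompt, score) pairs, best first."""
    results = [(p, score(model(p), required_terms)) for p in prompts]
    return sorted(results, key=lambda pair: pair[1], reverse=True)


prompts = [
    "Describe the water bottle.",
    "List the water bottle's key features as bullet points.",
]
ranked = compare_prompts(prompts, fake_model, ["durable", "cold", "750 ml"])
for prompt, value in ranked:
    print(f"{value:.2f}  {prompt}")
```

Because real models are not deterministic, a single run per prompt is only a rough signal; repeating each prompt several times and averaging the scores gives a fairer comparison.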

Bias awareness and mitigation are essential to ensure fair and ethical AI language model outputs. By actively addressing biases, prompt engineers can strive for equitable and inclusive responses, promoting the ethical use of AI technology and avoiding potential harm or discrimination.

Domain-specific prompts are essential in prompt engineering as they provide customized instructions and context that align with a specific field or industry. By incorporating domain-specific terminology and constraints, these prompts enhance the accuracy and relevance of AI model outputs within the designated domain. This approach ensures that the AI models possess specialized knowledge, enabling them to produce more precise and contextually appropriate responses for domain-specific tasks.
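A domain-specific prompt can be sketched as a template that places the field's terminology and constraints ahead of the user's question. The `domain_prompt` function, the glossary entries, and the healthcare example below are illustrative assumptions, not a prescribed format.

```python
def domain_prompt(domain, glossary, constraints, question):
    """Prefix a question with domain context: defined terms and constraints."""
    parts = [f"You are assisting with a {domain} task.", "Use these terms as defined:"]
    for term, definition in glossary.items():
        parts.append(f"- {term}: {definition}")
    parts.append("Follow these constraints:")
    for rule in constraints:
        parts.append(f"- {rule}")
    parts.append(f"Question: {question}")
    return "\n".join(parts)


prompt = domain_prompt(
    domain="healthcare",
    glossary={"BP": "blood pressure", "HR": "heart rate"},
    constraints=[
        "Do not give a diagnosis.",
        "Recommend consulting a clinician for any treatment decision.",
    ],
    question="Summarize what a rising BP trend with stable HR may indicate.",
)
print(prompt)
```

Keeping the glossary and constraints as data, separate from the template, makes it easy to reuse the same structure for another domain by swapping in different terms and rules.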

Iterative refinement and error analysis pave the way for continuous improvement of AI language models. Through iterative refinement, prompt design can be adjusted based on the analysis of model outputs, leading to enhanced performance and accuracy. Error analysis helps identify common errors, biases, and limitations, allowing prompt engineers to make the corrections needed to optimize model behavior.
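A simple form of error analysis is to run a set of checks over a batch of model outputs and tally which checks fail most often; the most frequent failure suggests what to change in the next prompt revision. The checks, labels, and sample outputs below are hypothetical and stand in for whatever criteria your task requires.

```python
from collections import Counter


def classify_errors(output, max_words, required_terms):
    """Return a label for each check that this output fails."""
    errors = []
    if len(output.split()) > max_words:
        errors.append("too_long")
    for term in required_terms:
        if term.lower() not in output.lower():
            errors.append(f"missing:{term}")
    return errors


def error_report(outputs, max_words, required_terms):
    """Count how often each error label occurs across all outputs."""
    counts = Counter()
    for out in outputs:
        counts.update(classify_errors(out, max_words, required_terms))
    return counts


# Sample outputs collected from one prompt version (made up for illustration).
outputs = [
    "Durable bottle with 750 ml capacity.",
    "A bottle.",
    "This durable bottle is great and really very nice to carry around every single day.",
]
report = error_report(outputs, max_words=10, required_terms=["durable", "750 ml"])
print(report.most_common())
```

Here the most common failure is a missing capacity figure, so a reasonable next iteration would add an explicit instruction to state the capacity and then rerun the same checks.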

Prompt engineering is increasingly being used for developing LLMs and augmenting their capabilities with domain knowledge and external tools. Key industries include e-commerce, healthcare, marketing and advertising, education, and customer service. Here’s how prompt engineering is used across each of these industries:

Prompt engineering can be utilized in the e-commerce industry for personalized product recommendations, chatbots for customer support, and content generation for product descriptions or marketing materials.

In the healthcare industry, prompt engineering can be applied for patient interaction and support, medical data analysis, and generating personalized treatment plans or medical reports.

Prompt engineering can play a role in generating compelling ad copies, personalized marketing campaigns, sentiment analysis for brand perception, and chatbots for customer engagement.

Prompt engineering has applications in education for intelligent tutoring systems, automated essay grading, language learning support, and generating educational content or quizzes.

In the realm of customer service, prompt engineering can be employed to enhance experience through chatbots, automated email responses, and personalized support.

As interest in AI language models increases, prompt engineering is helping improve model performance, enhance response quality, and enable better user experiences. However, prompting techniques vary depending on the model used, such as GPT-3, DALL-E, or ChatGPT.

To drive the best prompt engineering outcomes, it is critical to engage expert engineers who possess in-depth knowledge of the underlying mechanisms of large-scale AI models. Skilled practitioners have an intricate understanding of these models, allowing for efficient troubleshooting and resolution of issues.

Anish Purohit is a certified Azure Data Science Associate with over 11 years of industry experience. With a strong analytical mindset, Anish excels in architecting, designing, and deploying data products using a combination of statistics and technologies. He is proficient in AI/ML/DL and has extensive knowledge of automation, having built and deployed solutions on Microsoft Azure and AWS and led and mentored teams of data engineers and scientists.