In an era of rapid technological advancement and the AI revolution, one aspect of the digital landscape demands our utmost attention: cybersecurity. As organizations embrace the possibilities of AI, cybercriminals are leveraging the same capabilities to orchestrate sophisticated attacks.

In this blog, we delve into the groundbreaking fusion of AI and cybersecurity, exploring how this synergy is reshaping the battle against modern-day cyber threats and attacks.

What Is AI in Cybersecurity?

AI in cybersecurity rapidly assesses large numbers of security events, including activity that may exploit zero-day vulnerabilities, and pinpoints suspicious actions that might lead to phishing or malicious downloads. It continuously collects data from the company’s systems and learns from experience, examining that data to uncover patterns and identify new types of attacks.

How Is AI Used in Cybersecurity?

AI in cybersecurity is used to analyze vast amounts of risk data and the connections between threats in your enterprise systems. This supports human-led cybersecurity efforts in areas such as IT asset inventory, threat exposure, control effectiveness, breach prediction, incident response, and internal communication about cybersecurity.

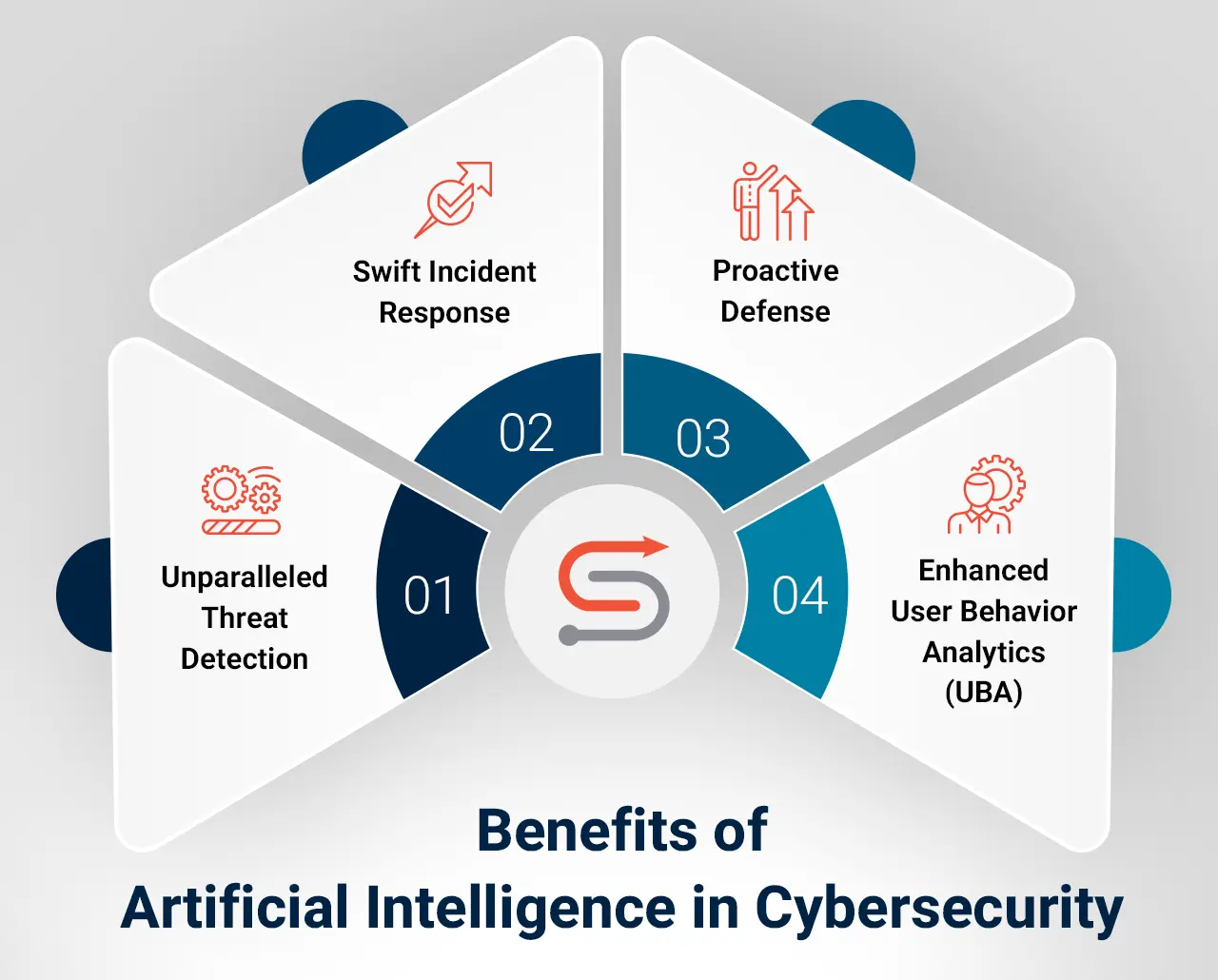

What Are the Benefits of Artificial Intelligence in Cybersecurity?

AI-driven cybersecurity solutions are revolutionizing the way organizations defend their sensitive data and digital assets. And it’s not just big companies that benefit from AI in cybersecurity. The technology is increasingly accessible to small organizations as well, offering less expensive and more comprehensive security options against today’s threats.

That said, here are a few benefits of AI in cybersecurity:

1. Unparalleled Threat Detection

The sheer volume and complexity of cyber threats demand an advanced approach to detection. Traditional signature-based methods struggle to keep pace with the speed at which new threats emerge. Enter AI-driven threat detection, a game-changing solution.

AI’s ability to process vast volumes of data and recognize patterns indicative of malicious activity enables early detection of cyber threats, including zero-day attacks and advanced persistent threats (APTs) that would otherwise go unnoticed. Unlike traditional methods, which rely on predefined rules, AI’s adaptive learning allows it to evolve and continuously improve its threat detection capabilities.
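To make the contrast concrete, here is a minimal Python sketch. It is not taken from any particular product. It places a signature check, which only recognizes already-known file hashes, next to a detector that learns a statistical baseline from historical observations and flags outliers. The hash values, the z-score threshold of 3.0, and the "outbound megabytes per hour" metric are illustrative assumptions. Real AI-driven tools use far richer models than a single z-score.

```python
import statistics

# Hypothetical signature database: truncated hashes of known-malicious files.
KNOWN_BAD_HASHES = {"e3b0c442", "5d41402a"}


def signature_match(file_hash: str) -> bool:
    """Signature-based check: only flags threats that are already known."""
    return file_hash in KNOWN_BAD_HASHES


class BaselineDetector:
    """Learns a baseline from past observations and flags statistical outliers.

    A z-score is a deliberately simple stand-in (assumption for illustration)
    for the machine learning models real products use.
    """

    def __init__(self, threshold: float = 3.0) -> None:
        self.threshold = threshold  # z-score above which a value is flagged
        self.history: list[float] = []

    def learn(self, value: float) -> None:
        # Each new observation updates the baseline, so it adapts over time.
        self.history.append(value)

    def score(self, value: float) -> float:
        mean = statistics.mean(self.history)
        stdev = statistics.pstdev(self.history) or 1e-9  # avoid division by zero
        return abs(value - mean) / stdev

    def is_anomalous(self, value: float) -> bool:
        return self.score(value) > self.threshold


if __name__ == "__main__":
    # Hypothetical metric: outbound megabytes per hour from one host.
    detector = BaselineDetector()
    for mb in [10, 12, 11, 9, 13, 10, 12, 11]:
        detector.learn(mb)

    print(signature_match("abcd1234"))  # False: an unknown hash slips past signatures
    print(detector.is_anomalous(250))   # True: far outside the learned baseline
    print(detector.is_anomalous(12))    # False: within normal variation
```

The unknown hash passes the signature check regardless of what the file does. The baseline detector, by contrast, flags the unusual transfer volume without needing any prior signature.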

2. Swift Incident Response

In the ever-accelerating digital landscape, every second counts when responding to cyber incidents. AI excels at automating repetitive tasks, such as incident validation and containment, freeing up human resources for more critical decision-making.

AI can rapidly triage, validate, and contain threats, enabling security teams to act swiftly, minimize potential damage, and limit the spread of attacks.

By streamlining incident response processes, organizations can significantly reduce their mean time to respond (MTTR). This is a critical metric because the faster a threat is contained, the less room an attack has to spread.
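MTTR is the average elapsed time between when an incident is detected and when it is responded to. The sketch below assumes "responded to" means contained, although organizations define the end point differently. The incident timestamps are made up for illustration.

```python
from datetime import datetime, timedelta


def mean_time_to_respond(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (contained_at - detected_at) across incidents."""
    if not incidents:
        raise ValueError("need at least one incident")
    durations = [contained - detected for detected, contained in incidents]
    return sum(durations, timedelta()) / len(durations)


if __name__ == "__main__":
    # Hypothetical incidents: (detected_at, contained_at)
    incidents = [
        (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 9, 30)),    # 30 min
        (datetime(2024, 5, 1, 10, 0), datetime(2024, 5, 1, 11, 30)),  # 90 min
        (datetime(2024, 5, 1, 14, 0), datetime(2024, 5, 1, 14, 15)),  # 15 min
    ]
    print(mean_time_to_respond(incidents))  # 0:45:00
```

Suppose automated triage cut the 90-minute incident to 15 minutes. MTTR would then fall from 45 minutes to 20 minutes, since (30 + 15 + 15) / 3 = 20.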

3. Proactive Defense

Reactive cybersecurity strategies are no longer sufficient to combat modern-day threats. AI’s predictive capabilities can identify potential vulnerabilities in an organization’s cybersecurity infrastructure. Decision-makers can then implement pre-emptive measures, fortifying their defenses before cybercriminals strike.

Using AI, organizations can adopt a proactive defense approach. They can analyze historical data, identify weak points, and predict potential areas of exploitation. Armed with this intelligence, cybersecurity professionals can take necessary measures to address vulnerabilities before they are exploited by cybercriminals.

4. Enhanced User Behavior Analytics (UBA)

Human error remains one of the most significant challenges in cybersecurity. AI adds another layer of protection by continuously monitoring user behavior across an organization’s network. By establishing a baseline of normal user activity, AI can quickly detect deviations that indicate suspicious or malicious actions, empowering security teams to act immediately and prevent potential data breaches.

AI-powered UBA can identify anomalous user behavior, such as insider threats or unauthorized access attempts. This level of scrutiny helps protect sensitive data from both internal and external risks.
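Here is a minimal sketch of the baselining idea. It records which resources each user normally accesses and at which hours, then reports how a new event deviates from that user's own history. The `Event` fields, the resource names, and the two deviation rules are hypothetical. Production UBA tools model many more signals and typically score deviations statistically rather than by exact set membership.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    user: str
    resource: str  # hypothetical: an application or file share name
    hour: int      # hour of day, 0-23


class UserBehaviorBaseline:
    """Per-user baseline of previously seen resources and access hours."""

    def __init__(self) -> None:
        self.resources = defaultdict(set)  # user -> resources seen before
        self.hours = defaultdict(set)      # user -> hours seen before

    def learn(self, event: Event) -> None:
        self.resources[event.user].add(event.resource)
        self.hours[event.user].add(event.hour)

    def deviations(self, event: Event) -> list[str]:
        """Return the reasons this event departs from the user's own baseline."""
        reasons = []
        if event.resource not in self.resources[event.user]:
            reasons.append("new resource")
        if event.hour not in self.hours[event.user]:
            reasons.append("unusual hour")
        return reasons


if __name__ == "__main__":
    baseline = UserBehaviorBaseline()
    for hour in (9, 10, 11):
        baseline.learn(Event("alice", "hr-portal", hour))

    print(baseline.deviations(Event("alice", "hr-portal", 10)))   # []
    print(baseline.deviations(Event("alice", "finance-db", 3)))   # ['new resource', 'unusual hour']
```

The baseline is kept per user on purpose: a 3 a.m. login to a finance database may be routine for one account and highly suspicious for another.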

What Are the Challenges of Artificial Intelligence in Cybersecurity?

In the rush to capitalize on the AI hype, programmers and product developers may overlook some threat vectors. Because unintentional errors are likely, here are some pitfalls to steer clear of:

1. Adversarial AI

As AI becomes more prevalent in cybersecurity, cybercriminals are quick to adapt. They are increasingly deploying their own AI-driven tactics to evade detection and launch more sophisticated attacks.

Adversarial AI involves crafting attacks specifically designed to bypass AI-based security systems. It poses a significant challenge to the effectiveness of AI-driven cybersecurity solutions, requiring constant vigilance and countermeasures to stay one step ahead of cybercriminals.

These attacks exploit weaknesses in AI algorithms, tricking them into misclassifying malicious activity as benign. Successfully deceiving an AI system this way can create blind spots in cybersecurity defenses.
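The toy example below illustrates one well-known evasion pattern. Against a linear scoring model, an attacker pads a malicious sample with features the model associates with benign software (here, extra benign imports) until the score drops below the detection threshold. The sample's actual behavior does not change. The feature names, weights, and bias are invented for illustration and do not come from any real detector.

```python
# Hypothetical linear malware classifier: positive weights push toward "malicious".
WEIGHTS = {
    "suspicious_api_calls": 2.0,
    "obfuscated_strings": 1.5,
    "benign_imports": -1.0,
}
BIAS = -3.0


def score(features: dict[str, int]) -> float:
    return BIAS + sum(WEIGHTS[name] * features.get(name, 0) for name in WEIGHTS)


def is_malicious(features: dict[str, int]) -> bool:
    return score(features) > 0


def evade(features: dict[str, int], max_padding: int = 100) -> dict[str, int]:
    """Greedily add benign-looking imports until the sample is classified benign.

    The features tied to malicious behavior (API calls, obfuscation) are left untouched.
    """
    x = dict(features)  # copy so the original sample is not modified
    x.setdefault("benign_imports", 0)
    while is_malicious(x) and x["benign_imports"] < max_padding:
        x["benign_imports"] += 1
    return x


if __name__ == "__main__":
    sample = {"suspicious_api_calls": 2, "obfuscated_strings": 1, "benign_imports": 0}
    print(is_malicious(sample))  # True (score 2.5)
    adversarial = evade(sample)
    print(adversarial["benign_imports"], is_malicious(adversarial))  # 3 False
```

One countermeasure is adversarial training, which adds crafted evasive samples like this one to the training data so the model learns not to be fooled by them.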

2. Bias and Fairness

AI’s ability to learn from historical data makes it a powerful tool, but it also makes it susceptible to any biases present in that data. Left unchecked, those biases can lead to unfair or discriminatory treatment of certain users or demographics, skewing security decisions and undermining the efficacy of cybersecurity measures.

For instance, biased AI algorithms may flag certain user behaviors as suspicious or risky based on factors like race or gender, leading to potential ethical and legal issues. To combat bias in AI, decision-makers must prioritize fairness, diversity, and transparency in their AI-driven cybersecurity implementations.
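One practical way to check for this kind of bias is to compare error rates across groups. The sketch below computes the false-positive rate per group, meaning the share of benign users who were wrongly flagged. The group labels and records are hypothetical. False-positive-rate parity is also only one of several fairness criteria an organization might choose.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, flagged, actually_malicious) tuples.

    Returns {group: share of that group's benign users who were flagged}.
    """
    flagged_benign = defaultdict(int)
    total_benign = defaultdict(int)
    for group, flagged, malicious in records:
        if malicious:
            continue  # only benign users can be false positives
        total_benign[group] += 1
        if flagged:
            flagged_benign[group] += 1
    return {g: flagged_benign[g] / total_benign[g] for g in total_benign}


if __name__ == "__main__":
    records = [
        ("group_a", True, False), ("group_a", False, False),
        ("group_a", False, False), ("group_a", False, False),
        ("group_b", True, False), ("group_b", True, False),
        ("group_b", True, False), ("group_b", False, False),
        ("group_b", True, True),  # a real threat; ignored for the FPR
    ]
    print(false_positive_rate_by_group(records))  # {'group_a': 0.25, 'group_b': 0.75}
```

A gap this large (0.25 versus 0.75) would be a signal to investigate the training data and features before trusting the model's alerts.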

3. Lack of Explainability

AI’s complexity can make it difficult to understand how it arrives at specific decisions or classifications. Deep learning models, for example, consist of multiple layers of interconnected nodes, which makes their decision-making process opaque. That opacity makes it hard to trace why a particular security decision was made.

This lack of explainability raises concerns in critical areas like cybersecurity, where understanding how AI reaches its conclusions is essential for ensuring its accuracy and avoiding potential biases.
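By contrast, a simple linear scoring model is explainable by construction. Each feature's contribution to the final score is just its weight times its value, so an analyst can see exactly why an alert fired. The feature names and weights below are hypothetical. Deep models have no such direct decomposition, which is why post-hoc explanation techniques such as SHAP or LIME are often applied to them.

```python
def explain(weights: dict[str, float], bias: float, features: dict[str, float]):
    """Return the model score and per-feature contributions, largest first."""
    contributions = {name: w * features.get(name, 0.0) for name, w in weights.items()}
    total = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return total, ranked


if __name__ == "__main__":
    # Hypothetical login-risk model.
    weights = {"failed_logins": 0.5, "new_device": 1.5, "off_hours_login": 0.25}
    login = {"failed_logins": 6, "new_device": 1, "off_hours_login": 0}
    total, ranked = explain(weights, bias=-2.0, features=login)
    print(total)  # 2.5
    for name, contribution in ranked:
        print(f"{name}: {contribution:+.2f}")
```

In this example, an analyst can see immediately that repeated failed logins drove most of the score.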

4. High-Volume False Positives

While AI has made significant strides in reducing false positives, it is not immune to generating them. AI-driven cybersecurity systems can still produce large numbers of false alarms, and real threats can be missed amid the noise.

High volumes of false positives divert cybersecurity teams’ attention from genuine threats and create operational inefficiencies. Striking the right balance between accurate threat detection and minimizing false positives remains an ongoing challenge in AI-driven cybersecurity.
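That balance often comes down to where the alert threshold sits on a model's risk score. The sketch below counts true positives, false positives, and missed threats at a given threshold. The scores and labels are made up to show the pattern; they are not measured from any real system.

```python
def alert_stats(scored_events: list[tuple[float, bool]], threshold: float) -> dict:
    """Count outcomes when every event scoring >= threshold raises an alert."""
    tp = fp = fn = 0
    for risk_score, is_threat in scored_events:
        flagged = risk_score >= threshold
        if flagged and is_threat:
            tp += 1
        elif flagged:
            fp += 1  # alert on benign activity
        elif is_threat:
            fn += 1  # real threat missed
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"true_positives": tp, "false_positives": fp,
            "missed_threats": fn, "recall": recall}


if __name__ == "__main__":
    # Hypothetical (risk score, is actually a threat) pairs.
    events = [(0.95, True), (0.90, False), (0.80, True), (0.60, False),
              (0.55, False), (0.40, True), (0.30, False), (0.10, False)]
    for threshold in (0.35, 0.5, 0.85):
        print(threshold, alert_stats(events, threshold))
```

With this made-up data, raising the threshold from 0.5 to 0.85 cuts false positives from three to one. The cost is that two of the three real threats are missed instead of one.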

Artificial Intelligence is Just One Tool in Your Cybersecurity Toolkit

It’s not clear if AI is the end-all be-all answer to help us mitigate future cybersecurity threats. But one thing is certain: we need all the help we can get. Projections suggest that global cybercrime costs will hit $10.5 trillion annually by 2025.

With this massive threat looming, it’s hard not to turn to AI as a sentry for security protocols. While AI and its machine learning underpinnings are unlikely to be a cure-all for corporate cybersecurity, they can play a crucial role in a well-rounded security system.

Interested in leveraging the power of AI and cybersecurity? Contact an expert at Synoptek today.

Contributor’s Bio

Anish Purohit is a certified Azure Data Science Associate with over 11 years of industry experience. With a strong analytical mindset, Anish excels in architecting, designing, and deploying data products using a combination of statistics and technologies. He is proficient in AI/ML/DL and has extensive knowledge of automation. He has built and deployed solutions on Microsoft Azure and AWS, and has led and mentored teams of data engineers and scientists.