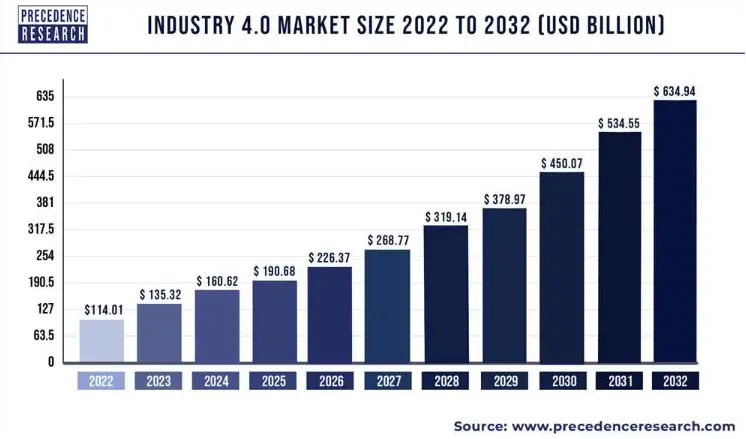

Intelligent factories have become synonymous with modern manufacturing. According to recent research, the smart factory market is expected to reach $256.6 billion by 2032. Today, manufacturing facilities need to exploit capabilities such as Generative AI (Gen AI), the Internet of Things (IoT), automation, machine learning, and big data analytics to survive and thrive.

Microsoft Cloud for Manufacturing gives manufacturing organizations several capabilities to realize their intelligent factory goals. At Hannover Messe 2024, Microsoft unveiled a range of AI features to help manufacturers modernize service operations, increase supply chain visibility, and deliver end-to-end personalization.

Read on as we discuss the rise of Industry 4.0, the challenges, and how the latest AI capabilities from Microsoft Cloud for Manufacturing set the foundation for smart factories.

The Rise of Industry 4.0 in Manufacturing

Continuous digital transformation, changing customer expectations, and the growing focus on sustainable manufacturing have made intelligent factories a global phenomenon. Intelligent systems, automated processes, and tools that learn and adapt in real-time enable manufacturers to improve production efficiency, minimize downtime, and reduce costs.

Source: Precedence Research

However, manufacturers face numerous challenges that impede their intelligent factory goals, including:

- Improving overall equipment effectiveness and reducing operational and maintenance costs.

- Ensuring compliance with constantly evolving data privacy and security, sustainability, and labor laws.

- Extending and scaling factories to meet new requirements without significant investments in operational technologies.

- Striking a delicate collaboration balance between humans and machines while optimizing resource utilization.

- Overcoming data and departmental silos to improve production visibility and transparency.

- Modernizing legacy infrastructure to overcome security loopholes and adapt to market changes – without expensive replacements.

- Addressing the growing skills gap and aging workforce challenge to keep up with the pace of competition.
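The first challenge in the list, overall equipment effectiveness (OEE), is commonly calculated as availability × performance × quality. Here is a minimal sketch of that standard calculation in Python. The function name, parameters, and shift figures are hypothetical and for illustration only; they are not part of any Microsoft product.

```python
def oee(planned_minutes, downtime_minutes, ideal_cycle_seconds, total_units, good_units):
    """Return the three OEE factors and their product for one shift (illustrative only)."""
    run_minutes = planned_minutes - downtime_minutes
    if planned_minutes <= 0 or run_minutes <= 0 or total_units <= 0:
        raise ValueError("planned time, run time, and total units must be positive")

    # Availability: share of planned time the line was actually running
    availability = run_minutes / planned_minutes
    # Performance: actual output compared with the ideal output for the run time
    performance = (ideal_cycle_seconds * total_units) / (run_minutes * 60)
    # Quality: share of produced units that passed inspection
    quality = good_units / total_units

    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Hypothetical shift: 480 planned minutes, 60 minutes of downtime,
# a 1-second ideal cycle time, 20,160 units produced, 19,152 of them good.
shift = oee(480, 60, 1.0, 20160, 19152)
print({name: round(value, 3) for name, value in shift.items()})
```

Breaking the score into its three factors shows whether downtime, slow cycles, or defects are pulling effectiveness down.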

The Role Microsoft Cloud for Manufacturing Plays

In today’s manufacturing landscape, siloed data and systems hinder efficiency and decision-making in many ways. Manufacturers must stay ahead of new trends and challenges to act with agility, build an industrial transformation roadmap, and achieve sustainability and revenue goals.

Microsoft Cloud for Manufacturing offers the capabilities today’s organizations need to lay the groundwork for an intelligent factory. With a new range of Generative AI features, it lets the manufacturing workforce draw insights from data faster using natural language processing and supports frontline decision-making.

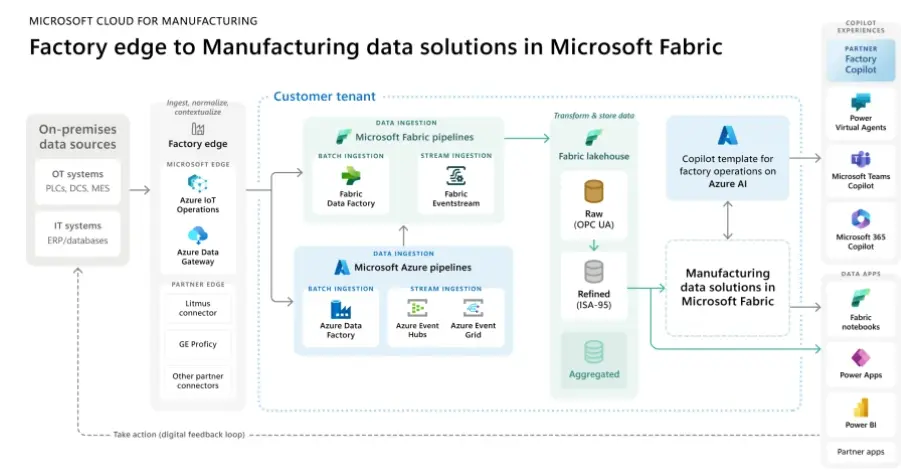

Source: Microsoft

Here are eight benefits Microsoft Cloud for Manufacturing offers via its AI-enabled capabilities:

Better Productivity

Microsoft Cloud for Manufacturing offers a range of Copilot capabilities, allowing organizations to modernize service operations. These capabilities, which are generally available today, can enhance data insights, boost productivity, and deliver more customization for field service managers. Managers can interact with Copilot to find information about work orders, retrieve important data, and reduce time spent sifting through lengthy documents. Additionally, technicians can launch a Dynamics 365 Remote Assist call within Microsoft Teams mobile to get expert guidance and improve first-time fix rates.

Improved Traceability

Microsoft Cloud for Manufacturing collects and stores data from the manufacturing ecosystem, empowering decision-makers with the data they need for intelligent operations. With a growing ecosystem of data connectors, plug-ins, AI capabilities, and APIs, Microsoft Cloud for Manufacturing helps bridge data silos, accelerate transformation, and open new opportunities for innovation.

Enhanced Production

Manufacturing data solutions in Microsoft Fabric enable organizations to uncover powerful insights across operations. By creating a unified IT-OT data estate, organizations can prepare manufacturing data for AI and use these insights to optimize production. Conversational assistants can leverage these insights from the factory floor to connect factory ecosystems, drive productivity, and enhance business operations.

Quick Issue Resolution

Microsoft Cloud for Manufacturing gives workers easy access to AI-enabled insights so they can solve problems without technical help. From which lines had the most defects to the reasons for those defects, workers can use natural language to seek assistance from Copilot and quickly uncover underlying reasons for issues, spot correlations, and make informed remediation decisions.
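To make this concrete, a question such as "which lines had the most defects, and why?" comes down to a simple aggregation over inspection data. The Python sketch below illustrates that idea only; it is not how Copilot is implemented. The records, line names, and function names are hypothetical.

```python
from collections import Counter

# Hypothetical inspection records: (production line, defect reason)
INSPECTIONS = [
    ("Line A", "misalignment"),
    ("Line A", "misalignment"),
    ("Line A", "scratch"),
    ("Line B", "scratch"),
    ("Line C", "misalignment"),
    ("Line C", "porosity"),
]


def defects_by_line(records):
    """Return (line, defect count) pairs, highest count first."""
    return Counter(line for line, _reason in records).most_common()


def top_reason(records, line):
    """Return the most frequent defect reason for a line, or None if it has no defects."""
    reasons = Counter(reason for rec_line, reason in records if rec_line == line)
    return reasons.most_common(1)[0][0] if reasons else None


ranking = defects_by_line(INSPECTIONS)
worst_line = ranking[0][0]
print(ranking)
print(worst_line, top_reason(INSPECTIONS, worst_line))
```

A conversational assistant adds value by letting workers ask this in plain language instead of writing the query themselves.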

Predictive Maintenance

With AI capabilities built into the fabric of Microsoft Cloud for Manufacturing, factory floor managers can predict and prevent maintenance issues. They can automate complex quality inspection processes with AI-powered solutions and simulate real-world scenarios with digital twins.

Supply Chain Optimization

Because Microsoft Cloud for Manufacturing unifies data across systems, it gives organizations a real-time view of the supply chain, helping them gain visibility and avoid disruptions. Organizations can create advanced demand forecasting models using relevant data and optimize their supply chain planning across inventory allocation, fulfillment, and other operations.

Connected Field Service

Microsoft Cloud for Manufacturing offers various mixed reality tools, enabling service agents to deliver personalized service. Real-time remote assistance and interactive instructions allow them to create innovative digital experiences that transform customer engagement.

Waste Reduction

Sustainability is critical to intelligent factories, and Microsoft Cloud for Manufacturing enables environmentally sustainable operations while optimizing energy consumption. Organizations can use AI, IoT, and analytics capabilities to drive new levels of agility, safety, and sustainability. They can reduce emissions and carbon footprint, improve waste and water management, and adopt responsible supply chain practices.

The Koerber Group embraced Microsoft Cloud for Manufacturing to build a service stack that enables greater flexibility and scalability. It helps them better connect the physical and the digital worlds across factory and supply-chain ecosystems and streamline end-to-end production management. Integrating Microsoft Copilot into the solution allows their workers to identify and assess the right information and solve problems quickly.

Achieve Your Intelligent Factory Goals with Microsoft Cloud for Manufacturing

As data becomes the new fuel for the manufacturing industry, Microsoft Cloud for Manufacturing helps overcome the challenges of siloed, unstructured, and underutilized data. Offering a unified data estate that can connect, enrich, and model data across information technology (IT) and operational technology (OT) systems, it enables easy access and analysis of data for every factory worker.

Leverage emerging capabilities across Generative AI, IoT, and Copilot to improve manufacturing visibility, enhance production, resolve issues, optimize supply chains, and reduce waste. By empowering manufacturers to effortlessly navigate the complexities of modern manufacturing environments and unlock unprecedented data insights, manufacturing data solutions pave the way for more intelligent, efficient, and innovative manufacturing processes.

Are you looking to advance your industrial transformation and achieve sustainability and financial goals?

Watch our on-demand webinar on Supply Chain and Automation Reimagined with Microsoft Cloud for Manufacturing for deeper insights, or download our white paper to learn more.